Quick Key is a tool that I use regularly to provide me with detailed feedback on student understanding. I wrote about it briefly in this post about technology that makes my life easier, but I thought that it merited a more detailed explanation.

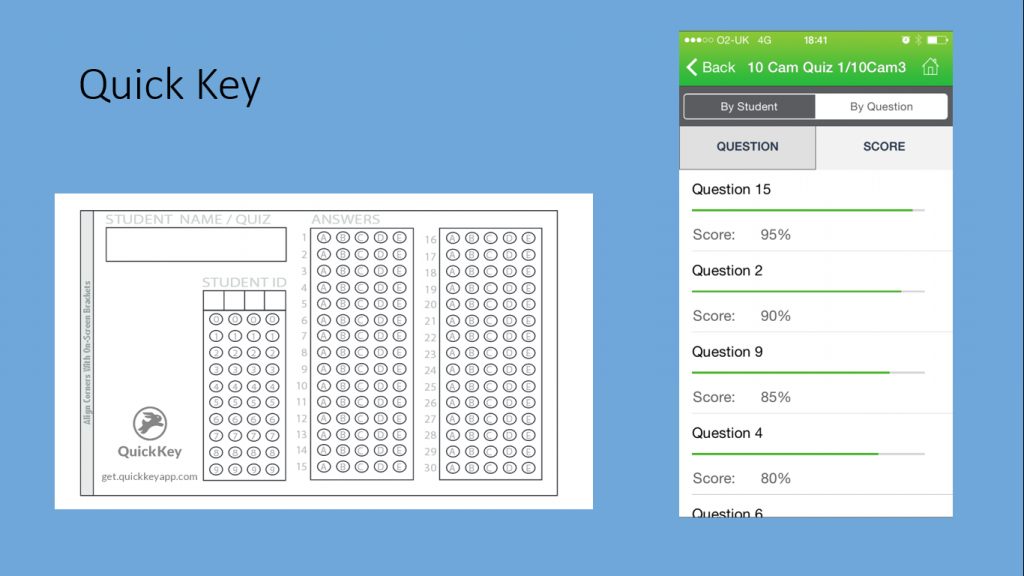

First of all, Quick Key is an app for iOS and Android. It is free, but there is a paid version which allows you to do more, and even that is incredibly cheap compared to other tech tools. You set a multiple choice quiz, students fill in one of the tickets and then you scan the tickets in using your phone. Students don’t need to have a device themselves, just a pencil. It takes very little time to create quizzes and very little time to scan them in. On your phone, you can sort the questions to find the ones that students struggled with, and you can also sort students by score. This is great, but when you go to the website, you can do much more with the data, including exporting itemised information.

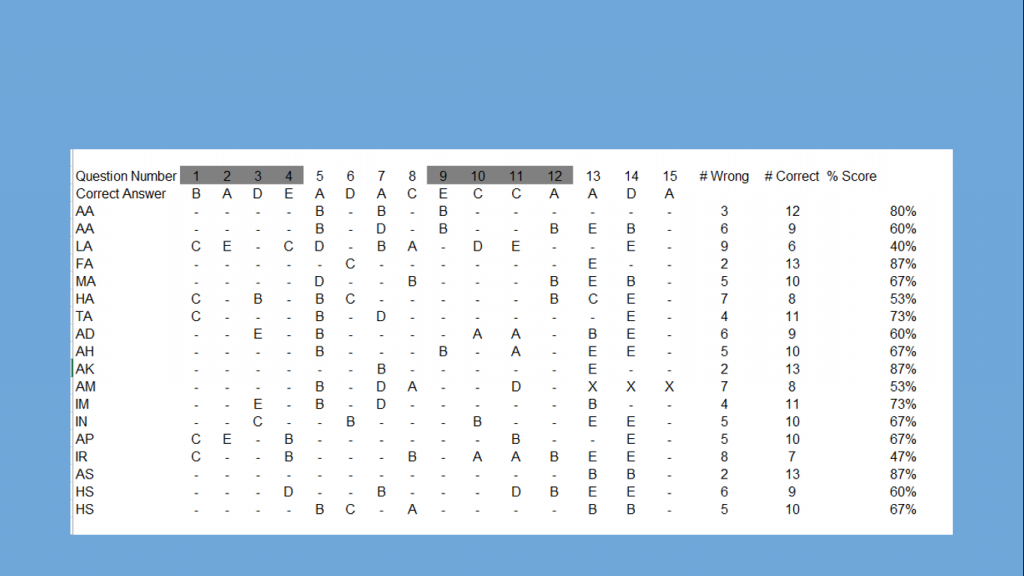

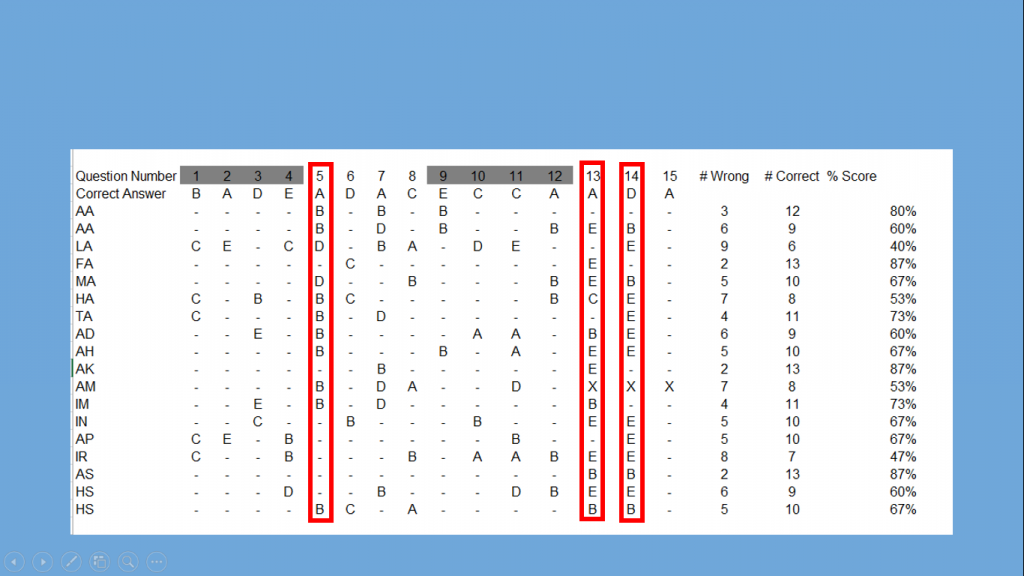

Here is the question level data from a recent quiz. The quiz was taken by a year 10 class and was on everything that they have studied this year. They should be able to answer every question as everything has been covered. That doesn’t mean that it has been learnt, however. A quick explanation of what you can see: student names are down the left, scores down the right. Question numbers and correct answers are along the top. (I have shaded questions on the same topic together to help my analysis.) A dot indicates that the student answered the question correctly, a letter indicates a wrong answer and the option chosen, while an X means that a question wasn’t answered (or wasn’t scannable).

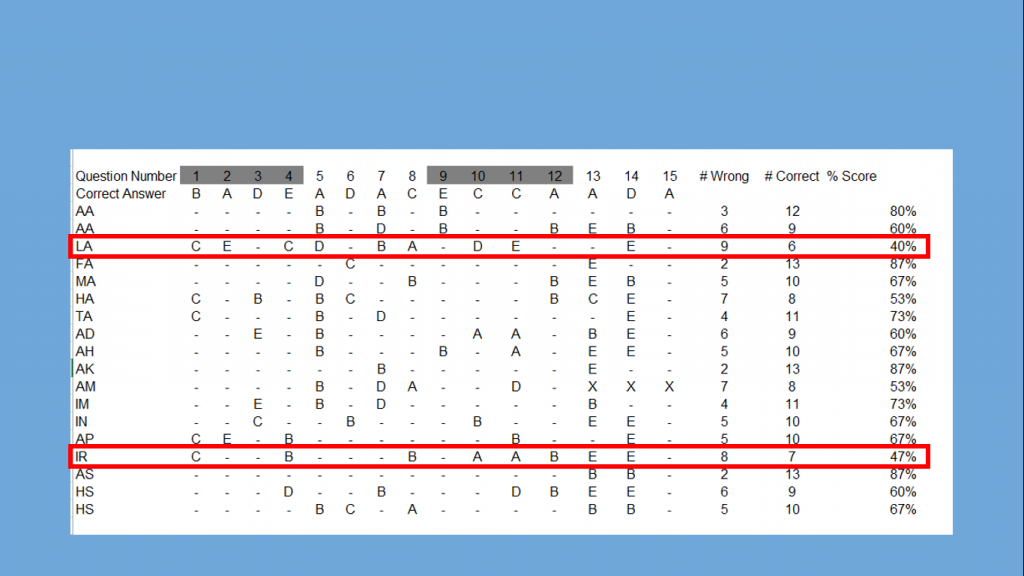

I have identified the two lowest scoring students here. If I were only interested in scores, I would just give each of them extra work and be done with it. However, on closer inspection I can see that the first student has performed poorly across all topics, which is consistent with his classwork too. But the other student has actually answered a number of the first questions correctly (1-8 were all on language terminology) and is not underperforming relative to his peers on those. It is the later questions where he has struggled. From this information, I can work out a couple of possibilities: 1) The student doesn’t know the poems from the anthology well, particularly Ozymandias and London. 2) Perhaps the student struggled to answer all of the questions in the given time. My next step? Extra revision on these two poems for homework; extra time on the next quiz.
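
For anyone who wants to do this kind of topic-by-topic check on exported data, here is a minimal sketch in Python. It assumes each student's answers have been turned into one string using the same marks as the grid above (a dot for correct, a letter for a wrong option, X for blank). The row, the 15-question length and the topic grouping are made up for illustration; only "questions 1-8 are language terminology" comes from the quiz, and this is not Quick Key's actual export format.

```python
# Assumed convention, mirroring the grid: "." = correct,
# a letter = the wrong option chosen, "X" = unanswered or unscannable.

def topic_scores(row, topics):
    """Return the fraction of questions answered correctly in each topic."""
    scores = {}
    for topic, question_numbers in topics.items():
        # Question numbers are 1-based; string positions are 0-based.
        correct = sum(1 for q in question_numbers if row[q - 1] == ".")
        scores[topic] = correct / len(question_numbers)
    return scores


# Hypothetical grouping and a made-up row for one student.
topics = {"terminology": range(1, 9), "later questions": range(9, 16)}
row = "......B.ACXBDAX"

print(topic_scores(row, topics))
```

A student like this one looks weak on the overall score, but the split shows the problem sits entirely in the later questions.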

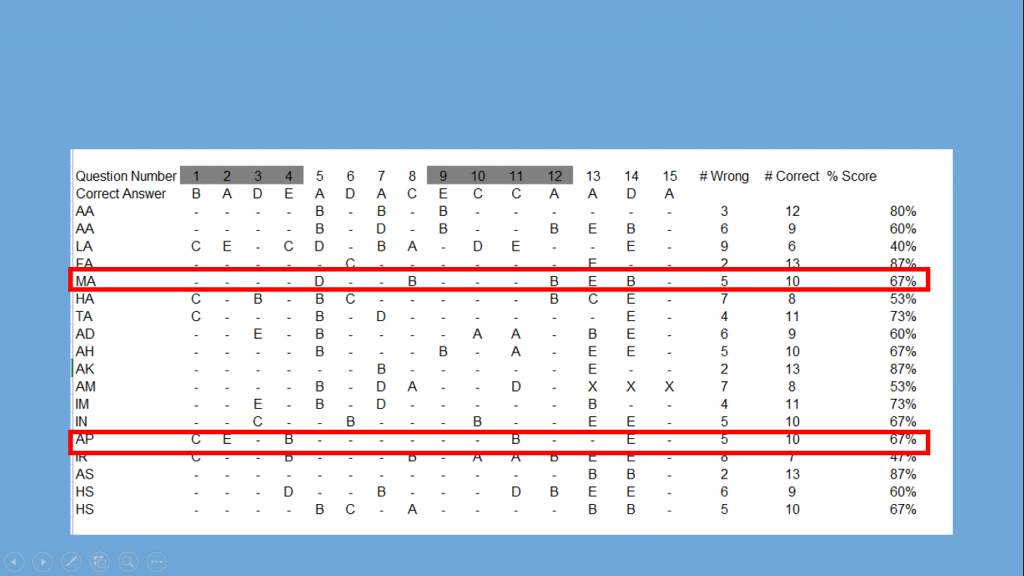

This question level analysis allows us to identify the differences between pupils who at first seem to be performing at an identical level. If we look at these two students who both achieved 67%, we can see that, with the exception of question 14, they answered completely different questions incorrectly. If we said that 67% equalled a C and put these two students in a C grade intervention group, neither of them would get exactly what they needed! Question level analysis of this kind allows us to really know what individual students need.
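
The same comparison can be sketched in a few lines of Python. The two rows below are invented (15 questions, 10 correct each, so roughly 67%), and the one-character-per-question format is an assumption based on the grid, not a real export.

```python
# Assumed convention: "." = correct, anything else = not answered correctly.

def wrong_questions(row):
    """Return the set of 1-based question numbers not answered correctly."""
    return {number for number, mark in enumerate(row, start=1) if mark != "."}


def compare_students(row_a, row_b):
    """Split the missed questions into shared, only-A and only-B lists."""
    wrong_a, wrong_b = wrong_questions(row_a), wrong_questions(row_b)
    return {
        "both": sorted(wrong_a & wrong_b),
        "only_a": sorted(wrong_a - wrong_b),
        "only_b": sorted(wrong_b - wrong_a),
    }


# Made-up rows: both students score 10 out of 15.
student_a = "..B..A..X.C..D."
student_b = ".C..A..B....AD."

print(compare_students(student_a, student_b))
```

Two identical percentages, one shared gap, and otherwise entirely different needs.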

Now let’s move from students to questions. Questions 13 and 14 were answered poorly across the board. This clearly identifies a gap in knowledge, and in this case it tells me that students don’t really understand what the exam will look like. They were ‘taught’ this, but I obviously didn’t do as good a job as I thought. This is good information to have because it is easily addressed on a whole class level.
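
Spotting questions like these can also be done mechanically. Below is a rough Python sketch that works out the proportion of the class answering each question correctly and flags the ones below a cut-off. The class data is invented, the 50% threshold is an arbitrary choice, and the row format is again an assumption based on the grid.

```python
# Assumed convention: one string per student, "." = correct.

def proportion_correct(rows):
    """Return, for each question, the fraction of students who got it right."""
    n_questions = len(rows[0])
    return [
        sum(row[q] == "." for row in rows) / len(rows)
        for q in range(n_questions)
    ]


def weak_questions(rows, threshold=0.5):
    """Return 1-based numbers of questions answered correctly by fewer than
    `threshold` of the class."""
    return [
        q + 1
        for q, fraction in enumerate(proportion_correct(rows))
        if fraction < threshold
    ]


# Made-up class of four students and four questions.
rows = ["..A.", ".B.C", "...C", "A..X"]

print(proportion_correct(rows))
print(weak_questions(rows))
```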

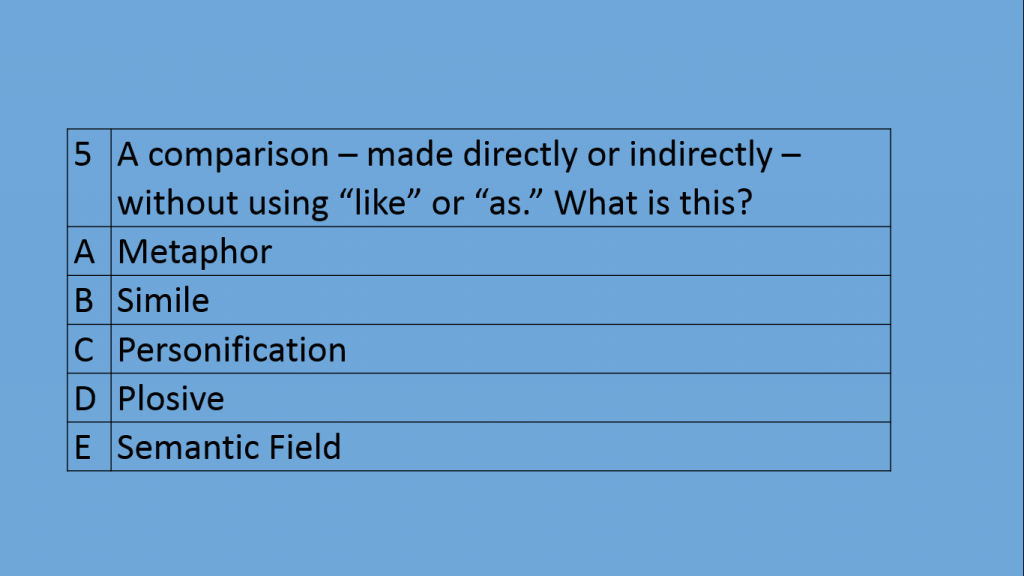

But what about question 5? This surprised me, as this question was designed to show that students understood what a metaphor was. And in class, they can do this. But a look at the question itself gives me a clue. I can make an assumption here that a number of students just saw “like or as” and thought simile. From this I can work out a couple of things: 1) My students need to read questions carefully and 2) I need to have a better explanation for similes than “it is a comparison using like or as”.

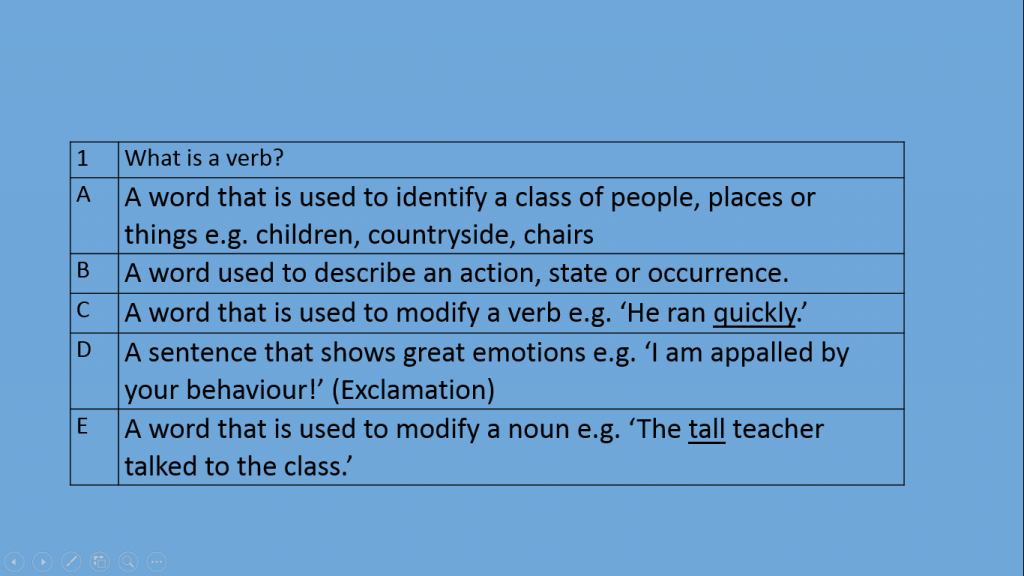

I pulled on this thread and looked at question 1: why did students not answer this correctly when I expected them to? Once again, a quick check makes me think that students saw “verb” and jumped straight to C without really reading the question. It may of course simply tell me that they don’t know what a verb is and that option was an understandable guess. Both of these questions flag up that some students can identify terminology and give examples, but can’t define them.
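
Checking which wrong option attracted the most students is easy to automate. This is a small Python sketch with invented data; it assumes the answer options are letters other than X, since X marks a blank in the grid.

```python
from collections import Counter

# Assumed convention: "." = correct, "X" = blank, any other letter = the
# wrong option that the student chose.

def wrong_option_counts(rows, question):
    """Count how often each wrong option was chosen for a 1-based question."""
    marks = (row[question - 1] for row in rows)
    return Counter(mark for mark in marks if mark not in {".", "X"})


# Made-up class of five students and four questions.
rows = ["C...", "C..A", "..B.", "C...", "X..."]

counts = wrong_option_counts(rows, question=1)
print(counts.most_common(1))
```

If one distractor dominates, as C does here, it is worth rereading the question to see what drew students to it.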

I could delve deeper into this data but I think the point of its usefulness is made. The quiz took maybe 30 minutes to create, 10 minutes to complete and 5 minutes to scan. Yet the information it has given me is perhaps more valuable than marking a set of books, something which would have taken considerably more time.